Evolution of digital radio

Amplitude modulation (AM) was the dominant form of radio broadcasting during the first 80 years of the 20th century, but channel fading, distortion and noise led to poor reception quality.

These problems were reduced to some extent by the introduction of frequency modulation (FM), which also offered stereo transmission and higher-fidelity audio, but analog radio still suffered from channel impairments and a limited coverage area.

In the early 2000s, two commercial startups, XM and Sirius (which later merged to become SiriusXM), introduced subscription-based digital satellite radio with nationwide coverage in the United States, using a revenue model similar to that of pay-TV channels. Around the same time, WorldSpace Radio started satellite broadcasts for Asia and Africa.

The Satellite Digital Audio Radio Services (SDARS) enabled mobile car audio listeners to tune into the same radio station anywhere within the satellite’s coverage map, limited only by intermittent blockage of the satellite signal by buildings, foliage and tunnels.

XM satellite radio took the lead in circumventing the blockage problem by installing terrestrial repeaters, which transmit the same satellite audio in dense urban areas and create a hybrid architecture of satellite and terrestrial broadcasts.

Around the same time, traditional terrestrial broadcasters also charted a digital course, for two reasons. First, they expected analog broadcasting to have a limited future as the world migrated to higher-quality digital services.

Second, the frequency spectrum is getting scarce, so additional content within the same bandwidth could be delivered only by digitising and compressing the old and new content, packaging it and then broadcasting it. Thus, the world started migrating from analog to digital radio.
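As a rough illustration of why digitising and compressing frees up capacity, the sketch below compares the bit rate of uncompressed CD-quality PCM with a compressed stream and counts how many programmes fit into a fixed channel. The function names, the 48 kbit/s compressed rate and the 96 kbit/s channel capacity are hypothetical figures chosen only for the arithmetic, not values from the article.

```python
def pcm_bitrate(sample_rate_hz: int, bits_per_sample: int, channels: int) -> int:
    """Bit rate of uncompressed linear PCM audio, in bit/s."""
    return sample_rate_hz * bits_per_sample * channels


def programmes_per_channel(channel_capacity_bps: int, programme_bps: int) -> int:
    """Number of whole programmes of a given bit rate that fit into a fixed capacity."""
    return channel_capacity_bps // programme_bps


# CD audio: 44.1 kHz sampling, 16 bits per sample, two channels
CD_PCM_BPS = pcm_bitrate(44_100, 16, 2)
# Assumption: a hypothetical compressed audio rate, for illustration only
COMPRESSED_BPS = 48_000
COMPRESSION_RATIO = CD_PCM_BPS / COMPRESSED_BPS
```

CD audio works out to 1 411 200 bit/s, about 29 times the assumed compressed rate; a hypothetical 96 kbit/s channel would then carry two such programmes at 48 kbit/s, or three at 32 kbit/s.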

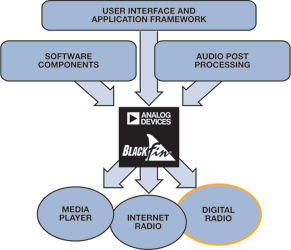

These digital broadcasting techniques offer clearer reception, a larger coverage area and the ability to pack more content and information into the existing bandwidth of an analog radio channel, as well as giving users more flexibility in accessing and listening to programme material (Figure 1).

Digital radio development example: India

In terrestrial broadcasting, there are two open standards, Digital Multimedia Broadcasting (DMB) and Digital Radio Mondiale (DRM), and one proprietary standard, HD Radio from iBiquity (the only digital radio technology approved by the FCC for AM/FM broadcasting within the United States).

DMB specifies several formats for digital audio broadcasting, including DAB, DAB+ and T-DMB, which use VHF Band III and L-band. DRM has two variants: DRM30, which operates from 150 kHz to 30 MHz, and DRM+, which operates in VHF Bands I, II and III.

Useful propagation in the VHF bands is essentially limited to line of sight, so each transmitter covers a relatively small geographic area. Short-wave signals, on the other hand, can reach almost anywhere in the world through multiple reflections from the ionosphere.
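The line-of-sight limitation can be quantified with the standard smooth-Earth radio-horizon approximation, d ≈ √(2kRh) for each antenna, where R is the Earth's radius, h the antenna height and k = 4/3 the usual allowance for atmospheric refraction. This is a minimal sketch that ignores terrain, buildings and diffraction; the function name and the antenna heights in the example are illustrative assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000.0
K_FACTOR = 4.0 / 3.0  # assumption: standard-atmosphere refraction factor


def radio_horizon_km(tx_height_m: float, rx_height_m: float, k: float = K_FACTOR) -> float:
    """Approximate line-of-sight range between two antennas over a smooth Earth."""
    effective_radius_m = k * EARTH_RADIUS_M
    # Distance from each antenna to its own horizon, then add the two
    d_tx = math.sqrt(2 * effective_radius_m * tx_height_m)
    d_rx = math.sqrt(2 * effective_radius_m * rx_height_m)
    return (d_tx + d_rx) / 1000.0
```

A 150 m transmitter mast and a 1.5 m car antenna give roughly 55 km, which illustrates why VHF networks need many transmitters to cover a large country, whereas short-wave coverage is not bounded by this geometry.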

For countries that are densely populated and geographically small, DMB transmission in VHF Band III and L-band works very efficiently. For countries with large geographic areas, medium-wave and short-wave transmissions provide effective coverage. For this reason, after a few years of DAB and DRM trials, India decided to adopt DRM.

In 2007, All India Radio (AIR), the Asia-Pacific Broadcasting Union (ABU) and the DRM Consortium conducted the first DRM field trial in New Delhi. The experimental trial ran for three days using three transmitters, during which various parameters were measured. In addition to the tests in New Delhi, AIR also carried out measurements at long distances.

It became clear that DRM could serve a larger population with a limited number of transmitters. In addition, the growing need for energy conservation makes power savings a paramount consideration. DRM’s 50% greater power efficiency compared with analog transmission plays a vital role in reducing environmental impact and supporting a ‘greener’ Earth.

Digital radio receivers and DSP

The physical world is analog, yet scientists and engineers find it easier to perform much of their computation and symbol manipulation in the digital domain. Sampling theory, signal processing techniques and readily available data converters make it straightforward for engineers to design, implement and test complex digital signal-processing (DSP) systems using analog-to-digital converters (ADCs) and digital signal processors with programmable cores.
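Sampling theory is what makes the move to the digital domain safe: a signal must be sampled at more than twice its highest frequency (the Nyquist criterion), otherwise higher frequencies fold back as spurious lower ones (aliasing). The sketch below, with hypothetical helper names, checks the criterion, computes the apparent frequency of a sampled tone and generates samples so the folding can be seen directly.

```python
import math


def satisfies_nyquist(max_signal_hz: float, sample_rate_hz: float) -> bool:
    """True if the sample rate exceeds twice the highest signal frequency."""
    return sample_rate_hz > 2 * max_signal_hz


def alias_frequency(signal_hz: float, sample_rate_hz: float) -> float:
    """Apparent frequency (between 0 and fs/2) of a real tone after sampling."""
    folded = signal_hz % sample_rate_hz
    return min(folded, sample_rate_hz - folded)


def sample_tone(freq_hz: float, sample_rate_hz: float, count: int) -> list:
    """Sample a unit-amplitude cosine at the given rate."""
    return [math.cos(2 * math.pi * freq_hz * n / sample_rate_hz) for n in range(count)]
```

Sampled at 48 kHz, a 30 kHz tone violates the criterion and produces exactly the same samples as an 18 kHz tone, which is why an anti-aliasing filter precedes the ADC.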

Development of powerful and efficient DSPs – along with advancements in information and communication theory – enabled the convergence of media technology and communications. Digital radio owes its existence to these technological advances.

Digital radio receivers were initially built as lab prototypes and then moved to pilot production. As with most technologies, first-generation products were generally assembled from discrete components. As market size and competition increase, manufacturers find that markets can be expanded further by bringing down the price of the finished product.

The prospect of higher volume attracts semiconductor manufacturers to invest in integrating more of these discrete components to bring down the cost. Over time, shrinking silicon geometries lead to further cost reductions and improvements in the product’s capability. This has been the pattern of evolution for many products, including FM radios and mobile phones.

Signal processing in digital radio

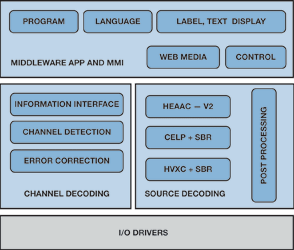

A typical digital communication system (Figure 2) converts the analog signal into digital form, compresses it, adds error-correction coding and multiplexes several signals to make the best use of the channel capacity. Because RF signals exist in the ‘real’ world of analog energy, the digital signal is converted to analog and modulated onto a carrier frequency for transmission.

At the receiver, the reverse process takes place, starting with demodulation of the carrier. The signal is then converted to digital, checked for errors and decompressed. Finally, the baseband audio signal is converted to analog and reproduced as sound.
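The transmit and receive chain described above can be sketched end to end in a few lines. This toy version, with hypothetical function names, quantises samples to 8 bits, protects the bits with a simple three-fold repetition code, sends them as BPSK symbols through additive Gaussian noise and reverses every step at the receiver. Compression, multiplexing and the RF carrier are omitted, and real systems such as DRM use far stronger coding and OFDM modulation.

```python
import random

BITS = 8  # assumption: ADC resolution for this toy example


def adc(samples, bits=BITS):
    """Quantise samples in [-1, 1] to unsigned integer codes (analog -> digital)."""
    levels = (1 << bits) - 1
    return [round((max(-1.0, min(1.0, s)) + 1.0) / 2.0 * levels) for s in samples]


def dac(codes, bits=BITS):
    """Map integer codes back to [-1, 1] (digital -> analog)."""
    levels = (1 << bits) - 1
    return [c / levels * 2.0 - 1.0 for c in codes]


def to_bits(codes, bits=BITS):
    return [(c >> i) & 1 for c in codes for i in reversed(range(bits))]


def from_bits(bitstream, bits=BITS):
    codes = []
    for start in range(0, len(bitstream), bits):
        value = 0
        for b in bitstream[start:start + bits]:
            value = (value << 1) | b
        codes.append(value)
    return codes


def repeat3(bitstream):
    """Toy channel code: send every bit three times."""
    return [b for b in bitstream for _ in range(3)]


def majority3(bitstream):
    """Decode the repetition code by majority vote over each group of three."""
    return [1 if sum(bitstream[i:i + 3]) >= 2 else 0 for i in range(0, len(bitstream), 3)]


def bpsk_modulate(bitstream):
    return [1.0 if b else -1.0 for b in bitstream]


def bpsk_demodulate(received):
    return [1 if r > 0 else 0 for r in received]


def transmit_and_receive(samples, noise_std=0.5, seed=1):
    """ADC -> channel coding -> BPSK -> noisy channel -> demodulation -> decoding -> DAC."""
    rng = random.Random(seed)
    tx_symbols = bpsk_modulate(repeat3(to_bits(adc(samples))))
    rx_symbols = [s + rng.gauss(0.0, noise_std) for s in tx_symbols]
    rx_bits = majority3(bpsk_demodulate(rx_symbols))
    return dac(from_bits(rx_bits))
```

With `noise_std=0` the output differs from the input only by quantisation error; raising `noise_std` shows the majority vote correcting isolated bit errors until the noise becomes too strong.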

Signal processing algorithms in a digital radio receiver can be classified into the following categories:

* Channel decoding.

* Source decoding.

* Audio post processing.

* Middleware.

* User interface (MMI).

In digital radio, source coding maps to an efficient audio codec (coder-decoder) and channel coding maps to the error-control components of the system. In practice, error control works better if the codec itself is designed for error resilience.
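As a textbook illustration of an error-control component, the Hamming(7,4) code below adds three parity bits to every four data bits, which lets the decoder locate and correct any single flipped bit. DRM's own channel coding is considerably more elaborate; this sketch, with hypothetical function names, only shows the principle.

```python
def hamming74_encode(data):
    """Encode 4 data bits as a 7-bit codeword laid out as p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one bit error; return (data bits, 1-based error position or 0)."""
    b = list(codeword)
    s1 = b[0] ^ b[2] ^ b[4] ^ b[6]
    s2 = b[1] ^ b[2] ^ b[5] ^ b[6]
    s3 = b[3] ^ b[4] ^ b[5] ^ b[6]
    error_pos = s1 + 2 * s2 + 4 * s3  # the syndrome spells out the error position
    if error_pos:
        b[error_pos - 1] ^= 1
    return [b[2], b[4], b[5], b[6]], error_pos
```

The price of this protection is a code rate of 4/7: redundancy is deliberately added back after the source coder has removed it, which is the trade-off between source and channel coding discussed here.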

An ideal channel coder should be resilient to transmission errors. An ideal source coder should remove all redundancy, compressing the message down to its information content (its Shannon entropy). Because so little redundancy remains, however, errors in a highly compressed input stream can cause severe audio distortion.

Thus, effective source coding should also ensure that the decoder can detect errors in the stream and conceal their impact so that overall audio quality is not noticeably degraded.
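Error detection and concealment can be illustrated with a per-frame checksum: the receiver recomputes a CRC-32 over each frame and, when it does not match, substitutes an attenuated copy of the last good frame instead of playing corrupted audio. The frame layout (16-bit PCM samples followed by a CRC-32), the 0.5 fade factor and the function names are assumptions for illustration; real codecs define their own error-detection fields and use more sophisticated concealment.

```python
import struct
import zlib


def make_frame(samples):
    """Pack 16-bit PCM samples and append a CRC-32 so the receiver can detect errors."""
    payload = struct.pack(f"<{len(samples)}h", *samples)
    return payload + struct.pack("<I", zlib.crc32(payload))


def frame_is_valid(frame):
    payload, crc = frame[:-4], struct.unpack("<I", frame[-4:])[0]
    return zlib.crc32(payload) == crc


def decode_with_concealment(frames, fade=0.5):
    """Decode frames; replace corrupted ones with a faded copy of the last good frame."""
    output = []
    last_good = None
    for frame in frames:
        if frame_is_valid(frame):
            payload = frame[:-4]
            samples = list(struct.unpack(f"<{len(payload) // 2}h", payload))
            last_good = samples
        elif last_good is not None:
            last_good = [int(s * fade) for s in last_good]  # repeat and attenuate
            samples = last_good
        else:
            samples = [0] * ((len(frame) - 4) // 2)  # nothing to repeat yet: mute
        output.append(samples)
    return output
```

Repeated corrupted frames fade progressively towards silence, which is usually less objectionable to listeners than bursts of decoded noise.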

DRM applies relevant innovations in source coding and channel coding to deliver a better audio experience. The audio source coding algorithm selected for DRM provides efficient audio coding (higher audio quality at a lower bit rate) as well as better error resilience (graceful degradation under transmission errors).
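The Shannon entropy mentioned earlier sets the lower bound on the average number of bits per symbol that any lossless code can achieve for a given symbol distribution. The sketch below, with a hypothetical function name, estimates it from symbol counts. Perceptual audio coders can go below this bound for the waveform because they are lossy: they discard information the listener cannot hear.

```python
import math
from collections import Counter


def shannon_entropy(symbols):
    """Entropy in bits per symbol of the empirical symbol distribution."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())
```

For the string `"aaaabbcd"` the estimate is 1.75 bits per symbol, so an ideal lossless code needs about 14 bits for the eight symbols instead of the 16 bits a fixed two-bit code would use.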

Efficient audio source coding

The Moving Picture Experts Group (MPEG) can be considered a conduit and framework for effective collaboration among academic, industry and technology forums. The success of collaborative audio efforts such as MPEG-1 Audio Layer II for broadcasting, and MP3 and AAC (Advanced Audio Coding) for storage and distribution, has encouraged the industry to engage in further research initiatives.

MP3 continues to be the most popular ‘unofficial’ format for web distribution and storage, but simpler licensing terms and Apple’s decision to adopt AAC as the audio format for the iPod have helped AAC gain more industry attention than MP3.

Let us consider AAC from the MPEG community to understand some of the important technologies involved in source coding.

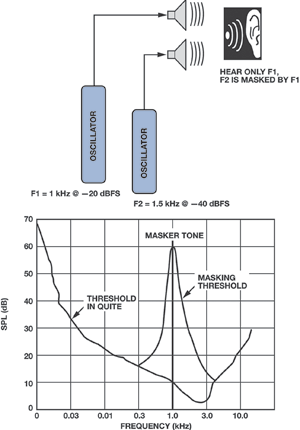

The psychoacoustic model (Figure 3) and time-domain aliasing cancellation (TDAC) can be considered the two initial breakthrough innovations in wideband audio source coding.
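TDAC is what allows the modified discrete cosine transform (MDCT) used in AAC to overlap successive blocks by 50% without increasing the data rate: each block of 2N samples yields only N coefficients, and the time-domain aliasing introduced by each inverse transform is cancelled when neighbouring blocks are overlap-added. The direct O(N²) sketch below, with hypothetical function names, uses a sine window that satisfies the Princen-Bradley condition; it demonstrates the reconstruction property only and includes no psychoacoustic model or quantisation.

```python
import math


def mdct(block):
    """MDCT: 2N windowed samples -> N coefficients (direct O(N^2) form)."""
    n_half = len(block) // 2
    return [
        sum(x * math.cos(math.pi / n_half * (n + 0.5 + n_half / 2) * (k + 0.5))
            for n, x in enumerate(block))
        for k in range(n_half)
    ]


def imdct(coeffs):
    """Inverse MDCT: N coefficients -> 2N time-aliased samples (2/N scaling)."""
    n_half = len(coeffs)
    return [
        2.0 / n_half * sum(c * math.cos(math.pi / n_half * (n + 0.5 + n_half / 2) * (k + 0.5))
                           for k, c in enumerate(coeffs))
        for n in range(2 * n_half)
    ]


def sine_window(length):
    """Sine window; satisfies the Princen-Bradley condition w[n]^2 + w[n+N]^2 = 1."""
    return [math.sin(math.pi * (n + 0.5) / length) for n in range(length)]


def mdct_roundtrip(signal, n_half):
    """Analyse and resynthesise with 50%-overlapping blocks; TDAC cancels the aliasing."""
    window = sine_window(2 * n_half)
    total = -(-len(signal) // n_half) * n_half  # round length up to a multiple of N
    padded = [0.0] * n_half + list(signal) + [0.0] * (total - len(signal) + n_half)
    output = [0.0] * len(padded)
    for start in range(0, len(padded) - 2 * n_half + 1, n_half):
        block = [padded[start + n] * window[n] for n in range(2 * n_half)]
        aliased = imdct(mdct(block))  # each block alone contains time-domain aliasing
        for n in range(2 * n_half):
            output[start + n] += aliased[n] * window[n]  # overlap-add cancels it
    return output[n_half:n_half + len(signal)]
```

Running `mdct_roundtrip` on a short signal reconstructs it to within floating-point error, even though each individual transform is critically sampled; in a real coder, the psychoacoustic model then decides how coarsely each coefficient may be quantised.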

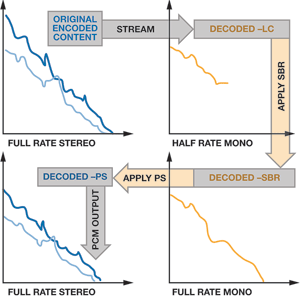

Spectral band replication (SBR, Figure 4) and spatial audio coding or binaural cue coding techniques from industry and academia can be considered the next two game-changing innovations.

These two key breakthroughs further enhanced AAC to provide scalable coding performance, resulting in the standardisation of HE-AAC v2 and MPEG Surround, both of which received a strong response from industry. Industry-driven formats, such as Dolby AC-3 and Microsoft WMA, also leveraged similar technological innovations in their latest media coding.

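The spectral band replication (SBR) tool doubles the decoded sample rate relative to the AAC-LC sample rate, regenerating the upper part of the audio spectrum from the lower part with the help of a small amount of side information. The parametric stereo (PS) tool reconstructs stereo from a monophonic LC stream using a small set of transmitted stereo parameters.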
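The two HE-AAC v2 tools can be illustrated with deliberately simplified sketches (all function names are hypothetical). The SBR part works on a list of spectral magnitudes: the encoder keeps only the low half and a coarse energy envelope of the high half, and the decoder copies the low band upwards and rescales each patch to match the envelope. The PS part downmixes to mono and sends a single level-difference parameter per frame, from which the decoder re-creates left and right. Real SBR and PS operate on QMF subbands, and PS also transmits phase and coherence parameters, so treat this as an illustration of the principle only.

```python
import math


def sbr_output_rate(core_rate_hz: int) -> int:
    """SBR output sample rate: twice the AAC-LC core rate, as described in the text."""
    return 2 * core_rate_hz


def band_energies(spectrum, n_bands):
    """Energy in each of n_bands equal-width groups of spectral magnitudes."""
    width = len(spectrum) // n_bands
    return [sum(x * x for x in spectrum[i * width:(i + 1) * width]) for i in range(n_bands)]


def sbr_encode(spectrum, n_bands=4):
    """Toy SBR encoder: keep the low half, send only a coarse high-band energy envelope."""
    half = len(spectrum) // 2
    return spectrum[:half], band_energies(spectrum[half:], n_bands)


def sbr_decode(low_band, envelope):
    """Toy SBR decoder: copy the low band upwards and rescale each patch to the envelope."""
    width = len(low_band) // len(envelope)
    high = []
    for i, target in enumerate(envelope):
        patch = low_band[i * width:(i + 1) * width]
        energy = sum(x * x for x in patch)
        gain = math.sqrt(target / energy) if energy > 0 else 0.0
        high.extend(x * gain for x in patch)
    return list(low_band) + high


def ps_encode(left, right):
    """Toy PS encoder: mono downmix plus one level-difference angle per frame."""
    mono = [(lt + rt) / 2 for lt, rt in zip(left, right)]
    angle = math.atan2(math.sqrt(sum(x * x for x in left)),
                       math.sqrt(sum(x * x for x in right)))
    return mono, angle


def ps_decode(mono, angle):
    """Toy PS decoder: split the mono signal into left/right using the level angle."""
    s, c = math.sin(angle), math.cos(angle)
    g_left, g_right = 2 * s / (s + c), 2 * c / (s + c)
    return [g_left * m for m in mono], [g_right * m for m in mono]
```

The PS sketch reconstructs an amplitude-panned source exactly, but it cannot represent phase differences or reverberant, decorrelated stereo, which is what the additional PS parameters are for.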

As with any improvement effort, measurement technology also played its part in improving audio quality. Audio quality evaluation tools and standards, such as perceptual evaluation of audio quality (PEAQ) and multiple stimuli with hidden reference and anchor (MUSHRA), enabled faster evaluation of technological experiments.
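MUSHRA results are commonly reported as a mean score per test condition with a 95% confidence interval. The helper below is a minimal sketch with a hypothetical name; it uses a normal-approximation factor of 1.96 for simplicity, whereas with the small listener panels typical of such tests a Student's t quantile is more appropriate, and ITU-R BS.1534 describes the full test and listener-screening procedure. The scores in the example are invented for illustration.

```python
import statistics


def mushra_summary(scores, z=1.96):
    """Mean and approximate 95% confidence interval of 0-100 MUSHRA scores for one condition."""
    if len(scores) < 2:
        raise ValueError("need at least two listener scores")
    mean = statistics.mean(scores)
    half_width = z * statistics.stdev(scores) / len(scores) ** 0.5
    return mean, (mean - half_width, mean + half_width)


# Hypothetical scores from five listeners for two test conditions
results = {
    "hidden reference": [100, 98, 100, 95, 100],
    "codec at 48 kbit/s": [78, 85, 70, 82, 75],
}
summary = {name: mushra_summary(scores) for name, scores in results.items()}
```

Non-overlapping intervals between two codec conditions suggest a genuine quality difference, while heavily overlapping ones indicate that more listeners or test items are needed before drawing a conclusion.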

References

Feilen, Michael. 'The Hitchhikers Guide to Digital Radio Mondiale (DRM)'. The Spark Modulator, 2011.

Subrahmanyam, T.V.B., and Mohammed Chalil. 'Emergence of High Performance Digital Radio'. Electronics Maker, pp. 56-60, November 2012. www.electronicsmaker.com

Part 2 will appear in a future edition of Dataweek.

© Technews Publishing (Pty) Ltd | All Rights Reserved