The digital multimeter or DMM is one of the most widely used electrical measurement instruments and provides electrical traceability to many users. However, it is the nature of measurement products that they require regular checks to ensure that they operate not only in a functional sense, but also within their specifications.

Such regular checks are known as calibrations, and carrying them out effectively requires a certain amount of knowledge of DMM technology. This article seeks to identify the important functional and calibration parameters and to offer a generic guidance strategy for their routine verification. Readers should note that this article is a summary of a more comprehensive application note, which is available for viewing at www.dataweek.co.za/+dw8887

DMM specifications

A DMM manufacturer has two important goals when specifying a DMM. It must ensure that the performance and functionality are clearly presented to the prospective purchaser such that the purchasing decision can be made, and also ensure that the instrument’s metrological performance is expressed to the best possible advantage in a competitive situation.

The specification is actually describing the performance of the instrument’s internal circuits that are necessary to achieve the desired functionality. Each function will have an accuracy expressed in terms of a percentage of the reading ± %R with additional modifiers or adders such as ± digits or ± μV. This is known as a compound specification and is an indication that there are several components to the specification for any particular parameter.

Sometimes this is seen as a way of hiding the true performance, when in reality, it is the only practical way to express performance over a wide parametric range with a single accuracy statement. Over the 40 years or so that DMMs have developed, the terminology has evolved to describe the performance parameters.
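As a rough illustration of how a compound specification is evaluated, the following Python sketch adds a percentage-of-reading term to fixed adders expressed in digits and μV. The function name, the parameter names and the example figures (0,002% of reading plus 2 digits of 10 μV each) are assumptions chosen for illustration and are not taken from any particular data sheet.

```python
def compound_tolerance(reading_v, pct_reading, digits=0, digit_size_v=0.0, floor_uv=0.0):
    """Return the +/- tolerance in volts for a compound specification.

    reading_v    : the value being measured, in volts
    pct_reading  : the '% of reading' term
    digits       : the '+/- digits' adder (number of least significant digits)
    digit_size_v : the value of one least significant digit, in volts
    floor_uv     : the fixed '+/- uV' adder, in microvolts
    """
    reading_term = abs(reading_v) * pct_reading / 100.0
    digits_term = digits * digit_size_v
    floor_term = floor_uv * 1e-6
    return reading_term + digits_term + floor_term


# Assumed example: 0.002 % of reading + 2 digits, one digit = 10 uV.
for value in (10.0, 1.0):
    tol = compound_tolerance(value, pct_reading=0.002, digits=2, digit_size_v=10e-6)
    print(f"{value:5.1f} V: +/-{tol * 1e6:.0f} uV ({tol / value * 1e6:.0f} ppm of reading)")
```

With these assumed figures the tolerance is 220 μV at 10 V (22 ppm of reading) but 40 μV at 1 V (40 ppm of reading), which shows why a single percentage figure could not describe the whole range.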

Performance parameters

It is convenient to think of the performance in terms of functional and characteristic parameters, where the former describes the basic capability or functionality (eg, the DMM can measure voltage) and the latter is a descriptor or qualifier of its characteristics (eg, with an accuracy of ±5 ppm). One can easily see that it is essential to understand the significance of the characteristics if the instrument is to be verified correctly.

Examples of functional parameters include functions such as V, A, Ω, scale length/resolution, read rate, amplitude range and frequency range. Examples of characteristics include stability with time and temperature, linearity, noise, frequency response or flatness, input impedance, compliance/burden, common/series mode rejection, crest factor and power coefficient.

DC voltage

Nearly all DMMs can measure DC voltage. This is because of the nature of the analog to digital converter (ADC) used to convert from a voltage to (usually) timing information in the form of clock counts. The most common way of doing this is to use a technique known as dual-slope integration.

This method involves applying the input signal to an integrator for a fixed period and then discharging the integrator capacitor and measuring the time taken to do so. This basic dual slope method is used in low-resolution DMMs, but longer scale length instruments require more complex arrangements to ensure good performance.
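The principle can be sketched as a small simulation. This is an idealised model only: it assumes a positive input, a perfect integrator and a 10 V reference, and the names `dual_slope_convert`, `v_ref` and `run_up_counts` are invented for the example. It shows that the result depends only on the ratio of two clock counts and the reference voltage.

```python
def dual_slope_convert(v_in, v_ref=10.0, run_up_counts=100_000):
    """Simulate an ideal dual-slope conversion of a positive input.

    Returns (run_down_counts, voltage_estimate).
    """
    if not 0.0 <= v_in <= v_ref:
        raise ValueError("v_in must lie between 0 and v_ref in this sketch")

    # Phase 1: integrate the input for a fixed number of clock counts.
    # The integrator value is kept in volt-counts; the RC time constant
    # and clock period would cancel in the final ratio.
    integrator = v_in * run_up_counts

    # Phase 2: discharge with the reference, counting clock ticks until
    # the integrator returns to zero.
    run_down_counts = 0
    while integrator > 0.0:
        integrator -= v_ref
        run_down_counts += 1

    return run_down_counts, v_ref * run_down_counts / run_up_counts


counts, estimate = dual_slope_convert(2.5)
print(counts, estimate)
```

In this model the quantisation error is at most one clock count, which is one reason why longer scale lengths need either more counts or the more elaborate integrator schemes mentioned above.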

DMMs are available with up to 8½ digits resolution and these usually employ multi-slope, multi-cycle integrators to achieve good performance over the operating range. An ADC is usually a single-range circuit, that is to say it can handle only a narrow voltage range of say 0 to ±10 V; however, the DMM may be specified from 0 to ±1000 V. This necessitates additional circuits in the form of amplifiers and attenuators to scale the input voltage to levels that can be measured by the ADC.

In addition, a high input impedance is desirable such that the loading effect of the DMM is negligible. Each amplifier and attenuator or gain-defining component introduces additional errors that must be specified. The contributions that affect the specifications for DC voltage are given below together with their typical expression in parentheses:

* Reference stability (% of reading).

* ADC linearity (% of scale).

* Attenuator stability (% of reading).

* Voltage offsets (absolute).

* Input bias current (absolute).

* Noise (absolute).

* Resolution (absolute).

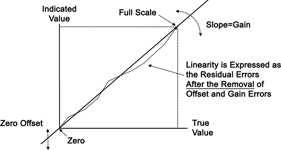

These contributions will be combined to give a compound specification expressed as ± % reading ± % full scale or range ± μV. In order that the performance of the instrument can be verified by calibration, the above effects must be isolated. That is to say, it is not possible to measure linearity, for example, until the effects of offset and gain errors have been removed. Figure 1 shows these basic parameters.
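The isolation of these effects can be sketched numerically. The following Python fragment is a minimal illustration, assuming that the offset is taken from a zero reading and the gain from a single full-range reading; the function name and the simulated readings are hypothetical. Only after both have been removed is the remaining deviation attributed to linearity.

```python
def linearity_residuals(zero_reading, fs_applied, fs_reading, points):
    """Remove offset and gain error, then return the linearity residuals.

    points is a list of (applied_voltage, dmm_reading) pairs.
    Residuals are returned in ppm of the full-range value.
    """
    offset = zero_reading
    gain = (fs_reading - offset) / fs_applied
    residuals = []
    for applied, reading in points:
        corrected = (reading - offset) / gain
        residuals.append((applied, (corrected - applied) / fs_applied * 1e6))
    return offset, gain, residuals


def simulated_dmm(v):
    """Hypothetical DMM: 2 uV offset, +3 ppm gain error, slight bow."""
    bow = 1e-7 * v * (10.0 - v)  # zero at 0 V and 10 V, largest at 5 V
    return 2e-6 + 1.000003 * (v + bow)


offset, gain, residuals = linearity_residuals(
    zero_reading=simulated_dmm(0.0),
    fs_applied=10.0,
    fs_reading=simulated_dmm(10.0),
    points=[(v, simulated_dmm(v)) for v in (1.0, 5.0, 9.0)],
)
for applied, ppm in residuals:
    print(f"{applied:4.1f} V: {ppm:+.3f} ppm of full range")
```

Without the offset and gain correction, the 2 μV offset and 3 ppm gain error of the simulated instrument would swamp the much smaller linearity term.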

The ADC is common to all ranges of all functions, therefore its characteristic errors will affect all functions. Fortunately, this means that the basic DC linearity need only be verified on the basic (usually 10 V) range.

The manufacturer’s literature should indicate which is the prime DC range. If this is not stated directly, it can be deduced from the DC voltage specification ie, the range with the best specification in terms of ± % R, ± FS and ± μV will invariably be the prime range. Other ranges eg, 100 mV, 1 V, 100 V and 1 kV will have a slightly worse performance because additional circuits are involved. At low levels on the 100 mV and 1 V ranges, the dominant factor will be noise and voltage offsets.

For the higher voltage ranges, the effects of power dissipation in the attenuators will give a power law characteristic, the severity of which will depend on the resistor design and individual temperature coefficients.

Knowledge of the design and interdependence of the DMM’s functional blocks can greatly assist the development of effective test strategies.

DMM functionality tree

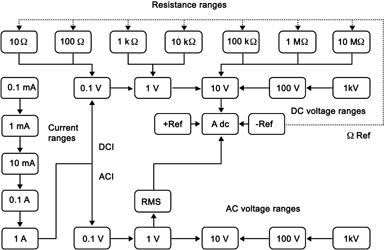

A DMM’s functionality and range dependence is quite logical and is generally designed to get the maximum use out of a minimum of components through the use of common circuits wherever possible. As an example, the ADC will be used for all functions, the current sensing resistors will be used for both AC current and DC current, and the AC rms converter will be used for both AC voltage and AC current. A typical functionality ‘tree’ is given in Figure 2.

DC voltage calibration strategy

For a new DMM, the manufacturer will test every aspect of the instrument’s performance. However, for routine repeat calibrations, the number of tests can be dramatically reduced if one accepts that instruments can be characterised according to their technology, operating principles and accumulated historical data.

It will usually be necessary to calibrate each DC voltage range in both polarities. The prime range, where there is neither gain nor attenuation of the input voltage, will typically be 10 V. This will be the first range to be measured, as its errors will also be passed on to the other ranges.

Calibration would then progress to the lower ranges of 1 V and 100 mV, then on to the 100 V and 1 kV ranges. These are left until last because of the effects of increasing power dissipation on both the DMM and the calibration source at the higher voltages.

The order of the tests might be as follows:

* 10 V zero, +10 V gain, -10 V gain.

* 10 V linearity: ±1 V, ±5 V, ±15 V, ±19,9 V.

* 1 V zero, +1 V gain, -1 V gain.

* 100 mV zero, +100 mV gain, -100 mV gain.

* 100 V zero, +100 V gain, -100 V gain.

* 1 kV zero, +1 kV gain, -1 kV gain.
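For automated calibration, the order of tests listed above might be held as a simple data structure. The sketch below is one possible representation in Python; the tuple layout `(range, test, applied voltage)` and all names are assumptions made for the example, while the ranges and test points are those of the list.

```python
PRIME_RANGE = 10.0                        # volts, measured first
OTHER_RANGES = [1.0, 0.1, 100.0, 1000.0]  # low ranges, then high ranges
LINEARITY_POINTS = [1.0, 5.0, 15.0, 19.9]  # applied on the prime range


def dcv_test_sequence():
    """Return the DC voltage tests as (range_v, test, applied_v) tuples."""
    steps = []

    def zero_and_gain(range_v):
        steps.append((range_v, "zero", 0.0))
        steps.append((range_v, "gain", +range_v))
        steps.append((range_v, "gain", -range_v))

    # The prime range comes first because its errors pass on to the others.
    zero_and_gain(PRIME_RANGE)
    for v in LINEARITY_POINTS:
        steps.append((PRIME_RANGE, "linearity", +v))
        steps.append((PRIME_RANGE, "linearity", -v))

    # The high-voltage ranges are left until last.
    for range_v in OTHER_RANGES:
        zero_and_gain(range_v)
    return steps


for range_v, test, applied_v in dcv_test_sequence():
    print(f"{range_v:7.1f} V range  {test:9s}  {applied_v:+9.1f} V")
```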

Careful analysis of the results can reveal much about the instrument’s performance. Changes in the error of the 10 V range will also be passed on to other dependent ranges. Therefore if the 10 V range was found to have increased by +5 ppm and the 100 V range showed no change, it means that the 100 V range had actually changed by -5 ppm but was compensated by the 10 V range error.

This kind of evaluation can be very useful if failures against specification are observed. If the 100 V range indicated a change of +10 ppm and the specification allowance was ±5 ppm, at first glance the 100 V range gain-defining components might be thought to be defective. If the 10 V range shows a similar change, however, the failure was in fact caused by the instrument’s internal reference. Either way, the 100 V range has failed against the specification, but in that case the cause of the problem has nothing to do with the 100 V range components.

Without this knowledge, the wrong components might be replaced, resulting in loss of history and additional expense.
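The reasoning above amounts to subtracting the prime range change from the change observed on a dependent range. A minimal sketch follows, assuming that small ppm changes simply add; the function name is hypothetical, and the +10 ppm prime range change in the second case is an assumed figure used only to illustrate a reference-related failure.

```python
def own_range_change_ppm(observed_change_ppm, prime_change_ppm):
    """Change attributable to a dependent range's own components.

    Assumes small changes, so that ppm contributions add linearly.
    """
    return observed_change_ppm - prime_change_ppm


# First case from the text: the 10 V range moved +5 ppm and the 100 V
# range apparently did not move at all.
print(own_range_change_ppm(observed_change_ppm=0.0, prime_change_ppm=5.0))

# Second case: the 100 V range moved +10 ppm. If the 10 V range is assumed
# to have moved by the same amount, the 100 V components have not changed.
print(own_range_change_ppm(observed_change_ppm=10.0, prime_change_ppm=10.0))
```

A result near zero on every dependent range, combined with a shift on the prime range, points towards the common circuits rather than the range-specific components.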

Functional tests

It is a good idea to carry out essential functional testing before committing valuable time and effort to a potentially lengthy calibration process. Some microprocessor-controlled DMMs have a diagnostic self-test function that can be used to perform sophisticated internal checks.

If such a facility is not available, each function and range should be selected and a copper short, or known voltage, resistance or current applied to the DMM’s input. This will ensure that a stable reading can be obtained and that the controls and displays are operating correctly.

Functional checks may be extended to cover input switching circuits, auxiliary outputs and remote-control digital interface if applicable. If an IEEE-488 interface is fitted and the DMM is one of a type that will be regularly calibrated, it may be worth writing a simple program to perform the functional testing. If a functional problem is found, a decision must be made as to whether it is safe to proceed with a pre-adjustment calibration check.

This is desirable if historical performance data is required, but may also assist with fault diagnosis. However, if there is any risk of personal injury or damage to the instrument, it is better to repair the instrument before proceeding with the calibration.
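A simple functional-test program of the kind suggested above might start as follows. This is a sketch under stated assumptions: it assumes the DMM implements the IEEE 488.2 common commands `*IDN?` and `*TST?` (many older IEEE-488 instruments do not), and it talks to any object that offers a `query()` method. The `FakeDmm` class and its responses are invented so that the example runs without hardware.

```python
class FakeDmm:
    """Stand-in for a real instrument session (hypothetical, demo only)."""

    def __init__(self, self_test_result="0"):
        self._responses = {
            "*IDN?": "ACME,DMM-1,0,1.0\n",   # invented identity string
            "*TST?": self_test_result + "\n",
        }

    def query(self, command):
        return self._responses[command]


def functional_check(dmm):
    """Run a basic identity and self-test check over the remote interface."""
    identity = dmm.query("*IDN?").strip()
    # IEEE 488.2 defines a *TST? response of 0 as 'self-test passed'.
    self_test_code = int(dmm.query("*TST?").strip())
    return {
        "identity": identity,
        "self_test_code": self_test_code,
        "self_test_passed": self_test_code == 0,
    }


print(functional_check(FakeDmm()))
print(functional_check(FakeDmm(self_test_result="1")))
```

In practice the fake would be replaced by a real interface session, and the check would be extended to select each function and range in turn and confirm that a stable reading is returned.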

Input characteristics

There should be a periodic test to ensure that the DMM’s input characteristics are correct. The test requires the use of an LCR bridge or relatively low-accuracy DMM. The object of the test is to ensure that there are no gross errors in the input characteristics.

Note that it is essentially a functional test looking for percentage rather than ppm errors. As an example, a common AC V input resistance is 1 MΩ. The test will be to ensure that it is not excessively high or low – say within ±10%. For most instruments, the AC input resistor will form part of the input attenuator, so significant changes in value will be obvious when the calibration is verified.

The DC V function input resistance measurement can be more difficult. For higher ranges like 100 V to 1 kV, there will be an input attenuator of typically 10 MΩ that determines the input resistance and voltage division ratio. Changes in the value of this component will cause changes in the DC V calibration.

However, for lower ranges, the input resistance will probably be determined by feedback and so may be very high indeed. In fact it may be so high that it is not possible to measure reliably.

The usual reason for checking the input characteristics is to ensure that they do not significantly disturb the test circuit conditions. This is sometimes described as being non-intrusive.

For current measurements, it is the burden voltage that is important. In a DMM, the burden is determined by the value of the current sensing resistors and can be measured as a resistance between the current sensing terminals. Typically, the resistors will be chosen such that the burden is 0,1 V or lower.
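The relationship between sensing resistance and burden is simply Ohm's law, as the short sketch below shows. The function names and the example ranges are assumptions for illustration; only the 0,1 V burden figure comes from the text.

```python
def burden_voltage(current_a, sense_resistance_ohm):
    """Voltage dropped across the DMM's current sensing resistance."""
    return current_a * sense_resistance_ohm


def max_sense_resistance(full_range_a, max_burden_v=0.1):
    """Largest sensing resistance that keeps the burden within the limit."""
    return max_burden_v / full_range_a


# Assumed example ranges: 100 mA and 1 A full range.
for full_range in (0.1, 1.0):
    r_max = max_sense_resistance(full_range)
    print(f"{full_range:4.1f} A range: up to {r_max:.2f} ohm "
          f"gives {burden_voltage(full_range, r_max):.2f} V burden")
```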

For the resistance function, there are two parameters of interest. The first is the input resistance of the voltage sensing terminals (assuming 4-wire capability).

This will usually be very high indeed and may be the same as that for the lower DC V ranges. Any change in input resistance will be directly reflected in the resistance function accuracy and so it is not normally necessary to measure it.

The second parameter is the compliance of the DMM’s current source. Ideally, the current flowing through the external resistance being measured should be independent of the voltage developed across the resistor – a true constant current source with infinite output impedance. However, if the current does vary, it will affect the resistance function’s linearity.

Zero considerations

All DC functions will need their zero offsets evaluated and compensated before measurements of the gain values are made. Generally, it is the gain values that transfer traceability. This is recognition of the fact that zero is really only a baseline for the measurements.

It is important that all DC measurements are referred to a known offset state, but that state does not have to be exactly zero. In fact, at normal room temperatures, it is very difficult to achieve a true zero in terms of volts, amps or ohms. Consideration of zero offsets should also include the calibration source, as all sources have residual offsets.

From Figure 1 it can be seen that an offset will affect all readings by a fixed amount. It is very important that a ‘system’ zero is performed when a DMM is being calibrated. A system zero means zeroing the DMM to the zero offset of the calibration standard. This will remove the effects of the standard’s offset from the measurement of the gain or full-range values.

For voltage measurements the DMM should be zeroed to the source ‘zero’ output. For resistance measurements the DMM should be zeroed to the resistor (as described in the user’s handbook) such that the effects of voltage offsets in the Hi and Lo circuit are removed. Current measurements require the DMM to be zeroed in the open-circuit state, although some calibration sources have a ‘zero’ current output to which the DMM should be zeroed.
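The arithmetic effect of a system zero can be shown with a short example. In practice the DMM's own zero or null function performs the subtraction; the sketch below does it explicitly, and the function name and the readings are hypothetical.

```python
def gain_error_ppm(zero_reading, gain_reading, nominal):
    """Gain error in ppm after referring the reading to the system zero.

    zero_reading : DMM reading with the source set to its 'zero' output
    gain_reading : DMM reading with the source set to the nominal value
    nominal      : the nominal (calibrated) value of the source output
    """
    corrected = gain_reading - zero_reading
    return (corrected - nominal) / nominal * 1e6


# Assumed readings on the 10 V range: 3 uV at source zero, 10.000033 V at +10 V.
print(f"{gain_error_ppm(3e-6, 10.000033, 10.0):+.2f} ppm")
```

Here the combined 3 μV offset of source and DMM would otherwise have been counted as an extra 0,3 ppm of gain error at 10 V.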

Generating a test plan

After considering the preceding discussions and the intended application of the DMM, a test plan can be devised. The plan must ensure that the basic calibration requirements of the instrument and user are met, that the measurements are made using standards of sufficient accuracy, and that the functionality and integrity of the instrument are verified.

The plan must also consider actions to be taken if the instrument requires repair. It is likely that there will be two strategies depending on whether or not a fault condition is suspected or has been reported.

An example routine calibration (no repair) checklist might look as follows:

* Have any problems been reported?

* Functional checks, controls, etc.

* Measure prime DC and AC linearity.

* Measure all gain values.

* Adjust as required.

* Check volt/hertz limits, etc.

Additional requirements if repaired might include:

* Adjust ADC if required.

* Adjust line locking if required.

* Adjust crest factor if applicable.

* Check input characteristics.

* Check CMRR.

Measurement uncertainty

The most significant contribution to the quality of DMM calibration is consideration of the measurement uncertainty. It is beyond the scope of this article to give a detailed analysis of the uncertainty contributions and their combination, but it is worth identifying what the sources of uncertainty will be:

* Uncertainty of the standard.

* Resolution of the DMM.

* Short-term stability of the DMM with time and temperature.

* Combined noise of standard and DMM.

* The calibration procedure.

It is imperative that the calibration standard is of sufficient accuracy to be able to calibrate the DMM with confidence. Some manufacturers separate DMM specifications into two parts: the calibration uncertainty and the instrument’s relative accuracy. If the available calibration uncertainty is larger (worse) than the specified calibration accuracy, the DMM’s total accuracy specification will no longer apply.

Sometimes test accuracy ratios (TAR) are quoted as a requirement ie, a TAR of 4:1 requires the accuracy of the standard to be four times better than the specification of the DMM. This is to ensure that the residual errors of the standard do not significantly affect the calibration accuracy of the DMM.

In an ideal world, TARs could be applied to all measurements, including those made by national standards laboratories. However, this is not practical at that level, nor is it necessary, provided that all sources of error have been identified and corrected and that a sound uncertainty analysis has been performed. Where such corrections are not applied, or where there is no calibration accuracy requirement specified, TARs are appropriate.
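A TAR check is a simple ratio, sketched below. The function names are hypothetical and the ppm figures are assumed values, not taken from any instrument specification; both quantities must be expressed in the same units at the same test point.

```python
def accuracy_ratio(dmm_spec, standard_accuracy):
    """Ratio of the DMM specification to the accuracy of the standard."""
    return dmm_spec / standard_accuracy


def meets_tar(dmm_spec, standard_accuracy, required_ratio=4.0):
    """True if the standard is good enough for the required TAR."""
    return accuracy_ratio(dmm_spec, standard_accuracy) >= required_ratio


# Assumed example: a DMM specified to 20 ppm at the test point.
for standard_ppm in (4.0, 8.0):
    ratio = accuracy_ratio(20.0, standard_ppm)
    verdict = "meets" if meets_tar(20.0, standard_ppm) else "does not meet"
    print(f"standard {standard_ppm:.0f} ppm: TAR {ratio:.1f}:1, {verdict} 4:1")
```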

The resolution or scale-length of the DMM determines the smallest change in the reading that may be observed. Clearly, it could become a limiting factor in the measurement regardless of how accurate the calibration standard might be. Assuming the calibration of 1 V DC, a 6½ digit DMM with a scale-length of 1,000 000 V can resolve 1 μV or 1 ppm of its nominal range, whilst a 4½ digit DMM can only resolve 100 μV or 0,01% for the same conditions.
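The resolution figures can be checked with a few lines of arithmetic. The sketch assumes that the nominal range value is displayed with one leading digit followed by the stated number of full digits, so that the least significant digit is the range divided by ten to the power of that number; the function name is hypothetical.

```python
def resolution(nominal_range_v, full_digits):
    """Return (least significant digit in volts, the same in ppm of range).

    full_digits is the number of full digits: 6 for a 6 1/2 digit DMM,
    4 for a 4 1/2 digit DMM.
    """
    lsd_v = nominal_range_v / 10 ** full_digits
    return lsd_v, lsd_v / nominal_range_v * 1e6


for digits in (6, 4):
    lsd_v, ppm = resolution(1.0, digits)
    print(f"{digits}.5 digits at 1 V: {lsd_v * 1e6:.0f} uV, {ppm:.0f} ppm of range")
```

For a 1 V nominal range this gives 1 μV (1 ppm) for six full digits and 100 μV (100 ppm) for four.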

The short-term stability of the DMM (and the calibration standard) with time and temperature will also affect the uncertainty of measurement. Usually the dominant factors here are temperature coefficient of the DMM and stability of the calibration environment. Secular drift is not usually significant unless the calibration takes several hours or the instrument is defective. An allowance for temperature coefficient and short-term stability can usually be obtained from the manufacturer’s specification data.

A more dominant uncertainty contribution will usually be the noise or run-around of the DMM reading during the measurement. Whilst it may be interesting to consider the individual noise contributions of the standard and the DMM, in practice it is their combined effect that is important. If individual readings can be easily observed, and if the noise is predominantly random, the sample standard deviation of the readings can be calculated and used as the uncertainty contribution for combined noise.

It is important that the configuration of the DMM is representative of normal use for this measurement.
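The sample standard deviation mentioned above is available in Python's standard `statistics` module. The readings in this sketch are invented values for a nominal 10 V input, and the function name is hypothetical.

```python
import statistics


def combined_noise_uv(readings_v):
    """Sample standard deviation of a set of DMM readings, in microvolts."""
    return statistics.stdev(readings_v) * 1e6


# Invented readings of a nominal 10 V source, taken in the DMM's normal
# configuration, so that they include both source and DMM noise.
readings = [10.000012, 10.000015, 10.000011, 10.000014, 10.000013]

print(f"mean  = {statistics.mean(readings):.6f} V")
print(f"noise = {combined_noise_uv(readings):.2f} uV (sample standard deviation)")
```

Note that `statistics.stdev` uses the n-1 divisor appropriate to a sample, which is what is wanted when only a limited number of readings has been taken.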

Finally, the calibration procedure itself will have an influence on the measurement uncertainty. A poorly chosen test sequence, insufficient settling time or poor interconnection techniques will all introduce additional errors that may pass unnoticed by the operator. This is the reason why the manufacturer’s recommended procedure should be used as the basis for DMM calibration. Note that this also applies to the use of the calibration standards.

For insight into other topics such as resistance, AC voltage, and DC and AC current calibration, view the technical article at www.dataweek.co.za/+dw8887

For more information contact Comtest, +27 (0)11 608 8520, www.comtest.co.za

© Technews Publishing (Pty) Ltd | All Rights Reserved